ESP32 Edge AI Camera

by Mukesh_Sankhla


In this project, I’ll walk you through creating an ESP32 Edge AI Camera system that simplifies data collection and model deployment for AI tasks. This system combines the power of the ESP32-S3 microcontroller with a camera module and a 3D-printed magnetic case, making it compact, portable, and easy to mount on various surfaces.

The unique feature of this project is the streamlined data capture and upload process to Edge Impulse. Using custom Arduino code on the ESP32 and a Python web interface built with Flask, you can quickly collect, preview, and upload labeled image data to Edge Impulse.

Here's how it works:

- Run the Python web interface and connect to the ESP32 via USB.

- Enter your Edge Impulse API key, select Training or Testing, and optionally specify a label for the images.

- Click the Capture and Upload button.

- The ESP32 receives the command via USB, captures an image, and sends it back to the PC.

- The image is saved, previewed on the web interface, and uploaded to Edge Impulse with the desired settings.

This system eliminates the manual tasks of connecting SD cards, transferring images, and labeling data, making it perfect for rapid prototyping and dataset generation.
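The PC side of the capture step can be sketched as follows. This is a minimal illustration, not the project's actual code: it assumes the ESP32 sends each image as a JPEG over USB serial, possibly mixed with log text, and it pulls the frame out by looking for the standard JPEG start (FF D8) and end (FF D9) markers. The function name `extract_jpeg` and the simulated byte stream are hypothetical.

```python
JPEG_SOI = b"\xff\xd8"  # JPEG start-of-image marker
JPEG_EOI = b"\xff\xd9"  # JPEG end-of-image marker


def extract_jpeg(buffer: bytes):
    """Return the first complete JPEG frame found in buffer, or None."""
    start = buffer.find(JPEG_SOI)
    if start == -1:
        return None
    end = buffer.find(JPEG_EOI, start + 2)
    if end == -1:
        return None  # frame not fully received yet
    return buffer[start:end + 2]


# Simulated serial data: log text, a tiny fake frame, then more text.
stream = b"capturing...\r\n" + JPEG_SOI + b"\x01\x02\x03" + JPEG_EOI + b"\r\ndone"
frame = extract_jpeg(stream)
print(len(frame))
```

In a real tool the bytes would come from the serial port, and the function would be called again as more data arrives until it stops returning None. Images with embedded thumbnails can contain extra end markers, so a length-prefixed protocol would be more robust.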

By the end of this project, you’ll learn how to:

- Capture data using an ESP32 camera.

- Train an Edge Impulse model with the collected data.

- Deploy the trained model back onto your ESP32 for real-time AI inference.

Supplies

1x FireBeetle 2 Board ESP32-S3 or FireBeetle 2 Board ESP32-S3 With External Antenna

8x 5mm Magnets

1x Quick Glue

Sponsored By NextPCB

This project was made possible thanks to support from NextPCB, a trusted leader in the PCB manufacturing industry for over 15 years. Their engineers work diligently to produce durable, high-performing PCBs that meet the highest quality standards. If you're looking for top-notch PCBs at an affordable price, don't miss NextPCB!

NextPCB’s HQDFM software services help improve your designs, ensuring a smooth production process. Personally, I prefer avoiding delays from waiting on DFM reports, and HQDFM is the best and quickest way to self-check your designs before moving forward.

Explore their free online DFM PCB Gerber viewer for instant design verification.

CAD & 3D Printing

To begin, I designed a custom case for the ESP32-S3 camera using Fusion 360. The design includes:

- A magnetic mounting system for easy attachment.

- Extensions for the side buttons, allowing access to the Reset button and a programmable button.

You can either modify the design using the provided Fusion 360 file or directly download and 3D print the following parts:

- 1x Housing.stl

- 1x Cover.stl

- 2x Button.stl

Additionally, I’ve included a simple mounting plate in the design. You can customize it or create your own to suit your needs.

I printed the case using Gray and Orange PLA filament on my Bambu Lab P1S. For the cover, I used a filament change technique to achieve a two-tone color effect. This adds a unique touch to the design while maintaining the functional integrity of the case.

Antenna Assembly

Simply connect the antenna that comes with the ESP32-S3 to the designated port on the ESP32-S3 board.

ESP32 Housing Assembly

- Take the 3D printed housing and the two button extensions.

- Insert the buttons into their designated slots on the housing.

- Place the ESP32-S3 board inside the housing, making sure the Type-C port aligns with the opening in the case.

Camera Cover Assembly

- Take the camera module included with the ESP32-S3, the 3D printed cover, and quick glue.

- Carefully apply a small amount of quick glue to the camera module.

- Attach the camera module to the hole on the cover and ensure it is correctly oriented and securely positioned. Be cautious with the glue to avoid any contact with the lens or delicate parts of the camera.

Final Assembly

- Take the housing assembly and the cover assembly.

- Connect the camera module cable to the ESP32 board.

- Neatly tuck the antenna wire and camera cable inside the housing to ensure nothing is pinched or obstructed.

- Snap the cover onto the housing to close it securely.

- Your ESP32 Edge AI Camera is now fully assembled.

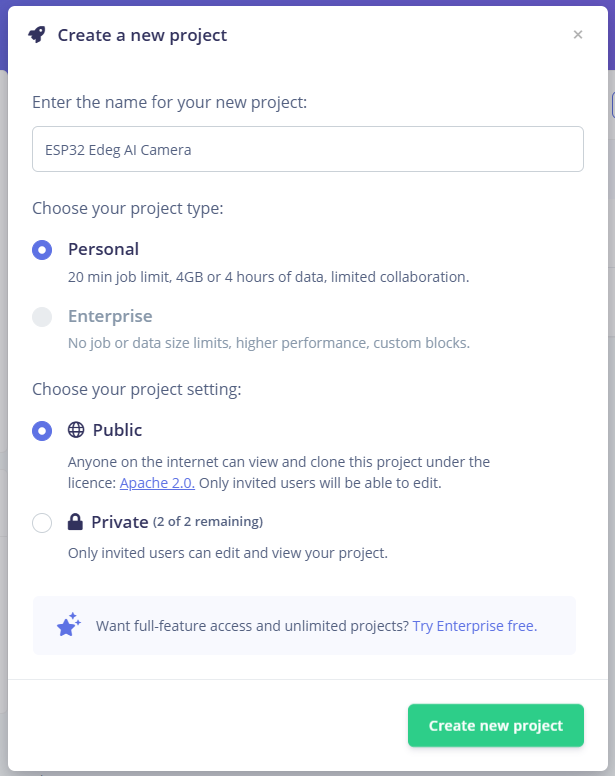

Edge Impulse Project Setup

1. Create an Edge Impulse Account

- Go to edgeimpulse.com and sign up, or log in if you already have an account.

- Click on "Create new project."

- Enter a name for your project.

- Choose whether to keep your project Private or Public.

- Click on "Create".

What is Edge Impulse

Edge Impulse is a platform designed for building, training, and deploying machine learning models on edge devices like microcontrollers and embedded systems. It simplifies the process of working with data, creating ML models, and deploying them directly to your hardware.

The key features of Edge Impulse include:

- Data Collection and Management: Gather and label your data efficiently.

- Model Training: Train custom machine learning models tailored to your use case.

- Deployment: Deploy the trained model to edge devices with optimized performance.

Data Acquisition Panel

The Data Acquisition Panel in Edge Impulse is where you upload and manage your data. Here’s how it works:

- You can upload images, audio, or sensor data directly from your device or through integrations like APIs.

- Data can be organized into training and testing sets for effective model evaluation.

- Labels can be added to your data, helping Edge Impulse understand and categorize the input.

- Live data acquisition is also supported via connected devices, which simplifies gathering real-time input.

Types of Data and How to Collect Them

- You can upload pre-collected data, such as images, audio, or sensor readings, directly to the platform.

- The Edge Impulse Data Forwarder allows you to send live data from connected devices like the ESP32 directly to the platform.

- Use the Edge Impulse API to programmatically upload data from external sources.
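For the API route, here is a rough sketch of how an upload request could be put together. It assumes the Edge Impulse ingestion service accepts files at `/api/training/files` and `/api/testing/files` with `x-api-key` and `x-label` headers, which is my understanding of the ingestion API. The helper name `build_upload_request` and the placeholder key are hypothetical, and the actual HTTP call is left as a comment because it needs the third-party `requests` package.

```python
INGESTION_HOST = "https://ingestion.edgeimpulse.com"


def build_upload_request(api_key: str, category: str, label: str = ""):
    """Return (url, headers) for uploading files to one dataset category."""
    if category not in ("training", "testing"):
        raise ValueError("category must be 'training' or 'testing'")
    url = f"{INGESTION_HOST}/api/{category}/files"
    headers = {"x-api-key": api_key}
    if label:
        headers["x-label"] = label  # omitted when the image is unlabeled
    return url, headers


# "ei_your_api_key" is a placeholder, not a real key.
url, headers = build_upload_request("ei_your_api_key", "training", "2")
print(url)

# With the requests package installed, the upload itself would look roughly like:
#   with open("img.jpg", "rb") as f:
#       requests.post(url, headers=headers,
#                     files=[("data", ("img.jpg", f, "image/jpeg"))])
```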

Upload Arduino Code

1. Download the Code

- GitHub Repository: Download the full repository

- Extract the files and locate the ESP32_Code.ino file in the project folder.

- Open Arduino IDE on your computer.

- Navigate to File > Open and select the ESP32_Code.ino file from the extracted folder.

2. Install ESP32 Board Manager

If you haven’t already configured the ESP32 environment in Arduino IDE, follow this guide:

- Visit: Installing ESP32 Board in Arduino IDE.

- Add the ESP32 board URL in the Preferences window:

- Install the ESP32 board package via Tools > Board > Boards Manager.

3. Install Required Libraries

You need to install the following libraries for this project:

DFRobot_AXP313A Library

- Download DFRobot_AXP313A

- In Arduino IDE, go to Sketch > Include Library > Add .ZIP Library and select the downloaded DFRobot_AXP313A.zip file.

4. Upload the code

Connect the ESP32 Edge AI Camera to your PC using a USB Type-C cable.

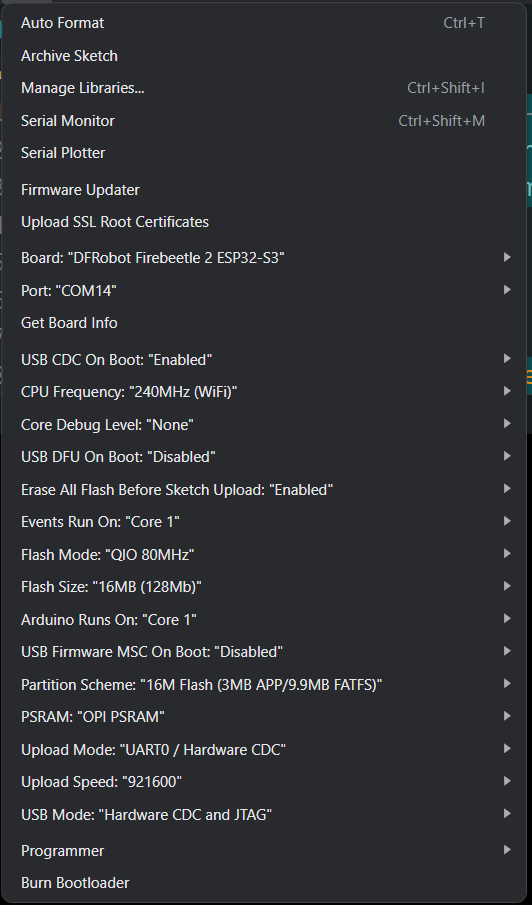

Go to Tools and set up the following:

- Board: Select DFRobot FireBeetle 2 ESP32-S3.

- Port: Select the COM port associated with your ESP32 board.

Click the Upload button (right arrow) in the Arduino IDE.

Run Python Code & Capture Images

Setting Up

- Mount the Camera:

- Attach the magnetic mounting plate to your laptop or desired surface using double-sided tape.

- Mount the AI camera onto the plate and connect it to your PC using a USB cable.

- Prepare the Code:

- Open the downloaded project folder in VS Code.

- Ensure that Python 3 is installed on your system.

- Install the required Python modules by running:

- Run the Web Interface:

- Start the Flask web application by running:

- Open your web browser and navigate to the default address: http://localhost:5000.

Using the Web Interface

- Select the COM Port:

- Choose the COM port where your ESP32 camera is connected.

- Input API Key:

- Enter your Edge Impulse API key to enable data uploads.

- Choose Mode:

- Select Train mode to collect training data.

- Select Test mode to gather test data.

- Add Labels (Optional):

- Enter a label for your dataset.

- Capture and Upload:

- Click the Capture and Upload button to capture an image using the ESP32.

- The image will be:

- Captured by the camera.

- Previewed in the web interface.

- Uploaded to Edge Impulse with the specified label and mode.

When a label is provided, the image is uploaded with a bounding box that carries that label. The box defaults to the full image resolution, which is useful when the object of interest fills the entire frame.

However, if you leave the label field empty, no bounding box will be drawn, and the image will not have a label assigned.
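To make the whole-image bounding box concrete, here is a small sketch that builds a label entry in the layout Edge Impulse uses for `bounding_boxes.labels` files, as far as I understand that format. The web interface in this project may attach the box differently. The file name `capture_001.jpg` and the 320x240 resolution are assumptions for illustration only.

```python
import json


def whole_image_labels(filename: str, label: str, width: int, height: int) -> dict:
    """Build bounding-box labels with one box that covers the full image."""
    return {
        "version": 1,
        "type": "bounding-box-labels",
        "boundingBoxes": {
            filename: [
                {"label": label, "x": 0, "y": 0, "width": width, "height": height}
            ]
        },
    }


# Hypothetical capture: a 320x240 image showing two fingers.
labels = whole_image_labels("capture_001.jpg", "2", 320, 240)
print(json.dumps(labels, indent=2))
```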

Finger Counter With ESP32 AI Camera

To demonstrate the capabilities of the ESP32 Edge AI Camera, we’ll create a simple project: Finger Counter with ESP32 AI Camera. This project will detect the number of fingers shown in front of the camera and output the corresponding count (e.g., showing one finger will result in "Count 1").
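The output logic can be sketched in a few lines. This is only an illustration in Python of the idea, not the firmware that runs on the ESP32: it assumes the model returns a list of (label, confidence) pairs, and the function name `count_from_detections` and the 0.5 threshold are hypothetical choices.

```python
def count_from_detections(detections, threshold=0.5):
    """Turn (label, confidence) pairs into a 'Count N' string.

    The most confident detection wins; with nothing above the
    threshold the result is treated as zero fingers.
    """
    best = max(detections, key=lambda d: d[1], default=None)
    if best is None or best[1] < threshold:
        return "Count 0"
    return f"Count {best[0]}"


print(count_from_detections([("2", 0.91), ("3", 0.40)]))  # Count 2
print(count_from_detections([]))                          # Count 0
```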

Data Collection

- Use the ESP32 Edge Capture system to collect images for the training and testing datasets.

- Capture as many images as practical, since more varied data generally improves accuracy. A good rule of thumb is to maintain a 5:1 ratio between training and testing datasets (e.g., for every 50 training images, collect 10 testing images).

- Include a variety of lighting conditions, angles, and backgrounds to make the model robust.
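One simple way to keep the 5:1 ratio while capturing is to send every sixth image to the testing set. The helper below is a hypothetical sketch of that bookkeeping, not part of the project's code.

```python
def split_for_capture(index: int, ratio: int = 5) -> str:
    """Return the dataset category for the capture at a zero-based index.

    Each block of ratio + 1 captures holds `ratio` training images
    followed by one testing image.
    """
    return "testing" if index % (ratio + 1) == ratio else "training"


modes = [split_for_capture(i) for i in range(60)]
print(modes.count("training"), modes.count("testing"))  # 50 10
```

Since consecutive captures tend to look alike, it also helps to change the pose or background before taking the image that goes into the testing set.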

Dataset Examples

- One Finger: Capture images with one finger raised.

- Two Fingers: Capture images with two fingers raised.

- Continue capturing images for three, four, and five fingers.

- Include some empty images (no fingers shown) to represent a "zero count."

This step ensures that the model has sufficient data to learn and perform well during real-time inference.

Labeling the Dataset

After collecting all the images, it’s time to label them in Edge Impulse. Follow these steps:

- Go to the Labeling Queue section.

- Label each image according to the number of fingers shown.

- Double-check each labeled image to ensure accuracy, as incorrect labels can reduce the model's performance.

Create Impulse

Navigate to the "Impulse Design" section in Edge Impulse.

Set up the Image Data block with:

- Input axes: Image

- Image width: 48 pixels

- Image height: 48 pixels

- Resize mode: Fit shortest axis
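To see what these settings do to a frame, here is a sketch of the arithmetic behind a "fit shortest axis" resize, assuming it scales the image until the shorter side matches the target and then center-crops the longer side. That is my reading of the mode, not Edge Impulse's actual implementation, and the 320x240 source resolution is an assumption.

```python
def fit_shortest_axis(src_w: int, src_h: int, dst_w: int = 48, dst_h: int = 48):
    """Return (scaled_w, scaled_h, crop_x, crop_y) for scale-then-center-crop."""
    # Use the larger scale factor so both target sides are fully covered.
    scale = max(dst_w / src_w, dst_h / src_h)
    scaled_w = round(src_w * scale)
    scaled_h = round(src_h * scale)
    # Offsets of the centered crop window inside the scaled image.
    crop_x = (scaled_w - dst_w) // 2
    crop_y = (scaled_h - dst_h) // 2
    return scaled_w, scaled_h, crop_x, crop_y


print(fit_shortest_axis(320, 240))  # (64, 48, 8, 0)
```

In this example 8 pixels are cut from the left and right of the scaled image, so fingers near the edge of the frame may fall outside what the model sees.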

Add an Object Detection block under Learning Blocks.

- Input Feature: Select the Image block.

- Output Features: Define the labels as 1, 2, 3, 4, 5.

Once configured, click Save Impulse.

Generate Data Features

Set Processing Parameters:

- Under the Parameters section:

- Ensure the Image Color Depth is set to Grayscale or another value suitable for your use case.

- Click Save Parameters to apply the settings.

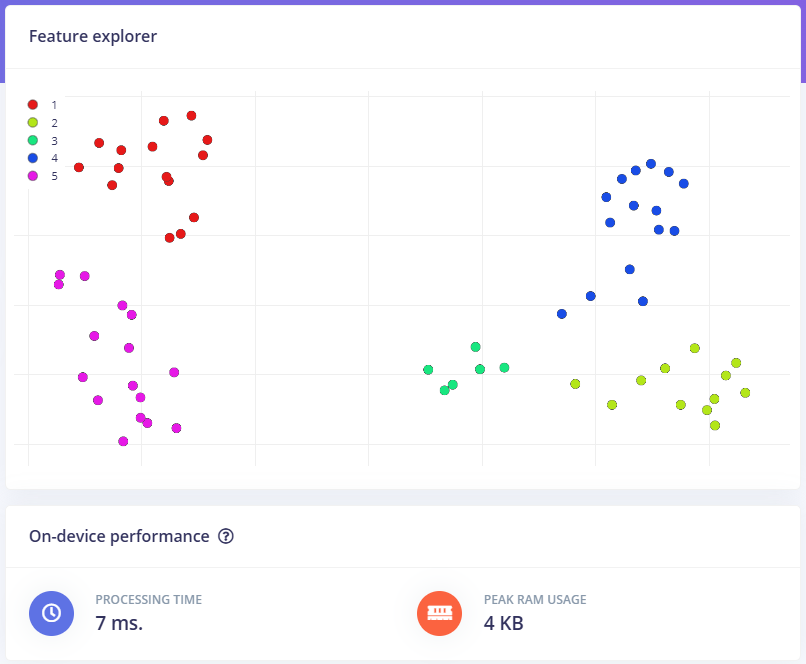

Generate Features:

- Switch to the Generate Features tab.

- Review the summary of the training dataset:

- Data in training set: Number of items (e.g., 64 items as shown in the screenshot).

- Classes: Check the labels/classes associated with your dataset (e.g., 1, 2, 3, 4, 5).

- Click the Generate Features button to process the data.

Analyze Feature Output:

- After processing, view the Feature Explorer on the right side of the page.

- The scatter plot in the Feature Explorer visualizes how different classes are separated based on the generated features.

- Each color corresponds to a specific class.

- Check that each class forms a reasonably distinct cluster; clear separation suggests the dataset is suitable for training, while heavy overlap suggests you need more or better-labeled images.

Training Model

Navigate to Object Detection:

- In Edge Impulse, go to Impulse Design > Object Detection.

Set Training Parameters:

- Number of Training Cycles: 30

- Learning Rate: 0.005

- Training Processor: Select CPU

- Enable Data Augmentation: Check the box for data augmentation (recommended for improving model generalization).

Choose the Model:

- Click on "Choose a different model" and select FOMO (Faster Objects, More Objects) MobileNetV2 0.35 from the list.

- Confirm your selection.
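It helps to know what FOMO produces: instead of full bounding boxes, it reports object centroids on a coarse grid. The sketch below assumes the default output grid is 8 times smaller than the input, which is my understanding of FOMO's default setup. The helper names are hypothetical.

```python
def fomo_grid(input_w: int, input_h: int, reduction: int = 8):
    """Return the (columns, rows) of the output grid for a given input size."""
    return input_w // reduction, input_h // reduction


def cell_center(col: int, row: int, reduction: int = 8):
    """Return the input-image pixel at the center of a grid cell."""
    return col * reduction + reduction // 2, row * reduction + reduction // 2


print(fomo_grid(48, 48))   # (6, 6)
print(cell_center(2, 3))   # (20, 28)
```

With the 48x48 input used in this project that would give a 6x6 grid, which is coarse but enough for a single hand in the frame.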

Train the Model:

- Click "Save & Train" to start the training process.

Understanding the Results:

After training, you will see the following outputs:

- F1 Score: 82.8%

- A single summary of detection quality across all classes, combining precision and recall. It is not the same as plain accuracy.

- Confusion Matrix:

- This matrix shows the model's performance for each class.

- Green cells represent correct predictions, and red cells indicate misclassifications.

- Metrics (Validation Set):

- Precision: 0.75 – The fraction of the model's detections that are correct.

- Recall: 0.92 – The fraction of actual objects that the model finds.

- F1 Score: 0.83 – The harmonic mean of precision and recall.
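As a quick check, the F1 score can be recomputed from the precision and recall reported above using the standard harmonic-mean formula:

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(f1_score(0.75, 0.92), 2))  # 0.83
```

The small gap between this value and the 82.8% shown at the top of the results is most likely down to the precision and recall being rounded to two decimals.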

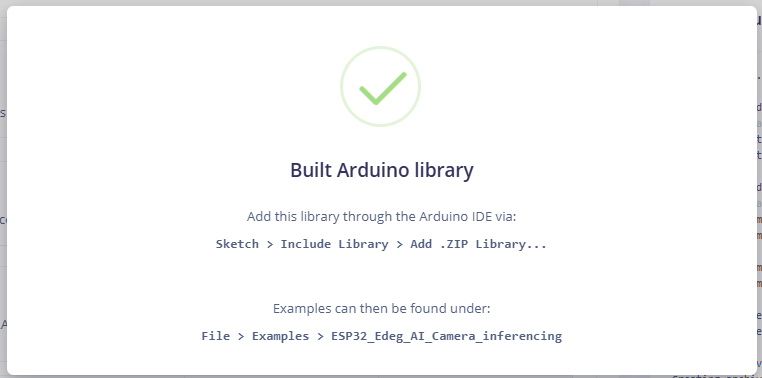

Deploying Model

The deployment process involves creating an Arduino-compatible library from your trained Edge Impulse model.

1. Generate the Deployment Files

- Navigate to the Deployment tab in your Edge Impulse project.

- Under the deployment options, select Arduino Library.

- Make sure the Quantized (int8) option is selected (this stores the model with 8-bit integers, which reduces its size and memory use on the ESP32).

- Click Build to generate the library.

- After the build completes, download the generated .zip file for the Arduino library.

- In Arduino IDE, go to Sketch > Include Library > Add .ZIP Library and select the downloaded zip file.
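For a feel of what the int8 option does, here is a minimal sketch of the affine quantization scheme used by TensorFlow Lite style int8 models, where a real value is approximated as scale * (q - zero_point). The scale and zero point below are made-up example values, not taken from this project's model.

```python
def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a real value to an int8 code, clamped to [-128, 127]."""
    q = round(x / scale) + zero_point
    return max(-128, min(127, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Map an int8 code back to its approximate real value."""
    return scale * (q - zero_point)


q = quantize(1.3, scale=0.25, zero_point=-10)
print(q, dequantize(q, scale=0.25, zero_point=-10))  # -5 1.25
```

Each value takes one byte instead of four, at the cost of a small rounding error (1.3 becomes 1.25 here).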

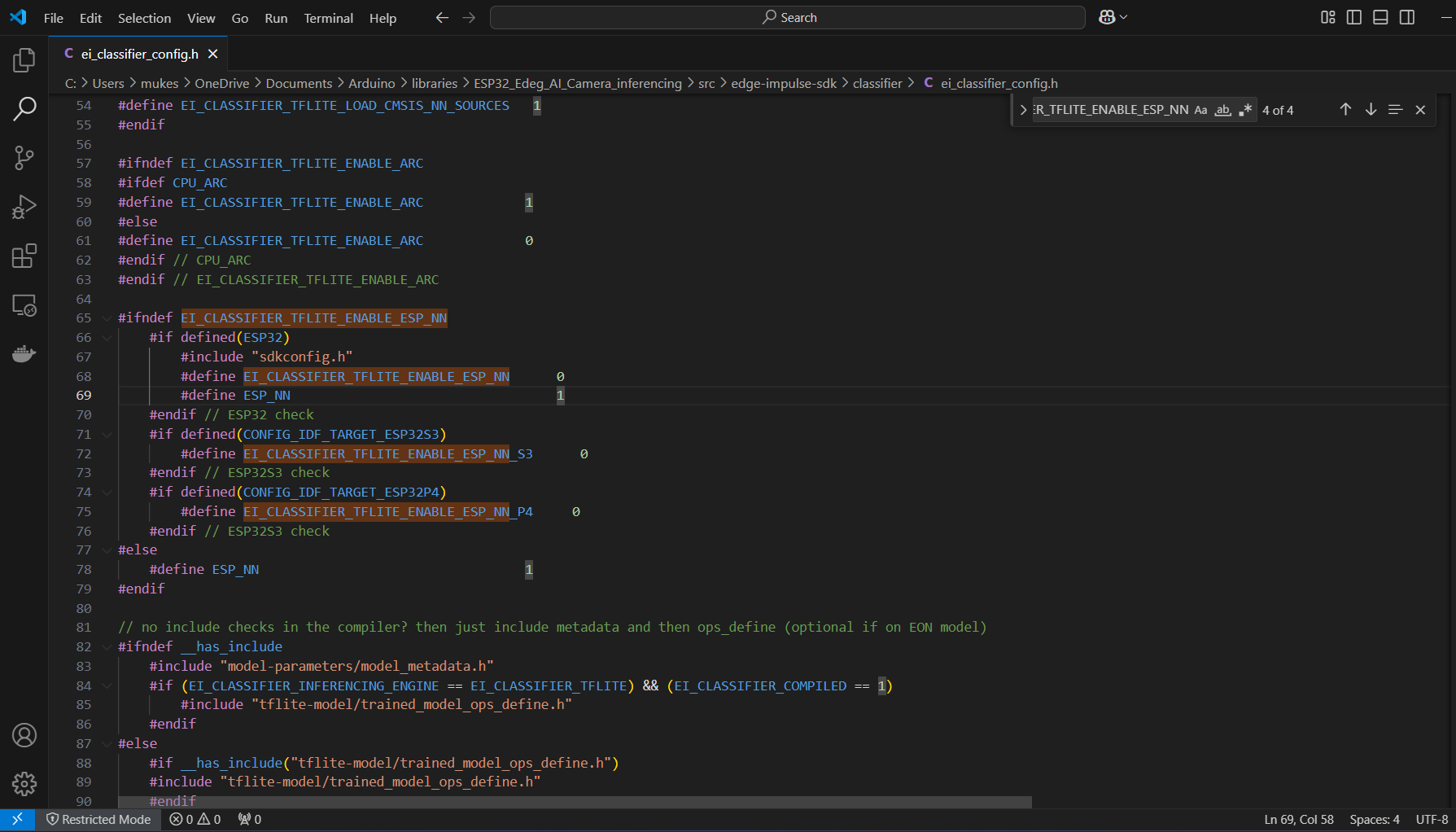

2. Modify the ei_classifier_config.h File

- Navigate to the ei_classifier_config.h file inside the extracted library folder:

- Path:

- Documents\Arduino\libraries\ESP32_Edeg_AI_Camera_inferencing\src\edge-impulse-sdk\classifier\ei_classifier_config.h

- Open this file in a text editor (like Notepad or VS Code).

- Find the following line:

- Change it from 1 to 0:

Why this change?

- This change disables ESP-NN (Espressif's neural network acceleration) for TensorFlow Lite, which can sometimes cause issues with certain models on the ESP32. Disabling it trades some speed for a model that runs reliably.

- Save the file after making this change.

Upload the Code

- Copy the provided code and paste it into the Arduino IDE.

- Select the correct board (DFRobot FireBeetle 2 ESP32-S3) in the Tools menu of the Arduino IDE.

- Select the correct Port for your device.

- Click Upload to flash the code to your ESP32.

If you are deploying your own Edge Impulse model, you need to ensure that the correct model is linked in the code.

Find this line in the code:

and change it to:

The output should look like this:

Conclusion

In this article, we walked through the complete process of building an object detection model using Edge Impulse, from setting up the device and collecting data to creating impulses, generating features, and preparing the dataset for training. Each step highlights the simplicity and efficiency of Edge Impulse in enabling developers to build edge AI solutions tailored to their specific applications.

By generating features and visualizing them through the Feature Explorer, we ensured that the data was well-prepared and distinguishable, a critical step in achieving accurate and reliable model performance. Additionally, the platform's metrics for on-device performance, such as processing time and memory usage, make it easier to optimize models for deployment on resource-constrained devices like the ESP32 Edge AI Camera.

A key highlight of this project was the custom capture tool, which streamlined data collection within the Edge Impulse workflow. By automating image capture, labeling, and upload, it removes the repetitive steps of transferring and labeling images by hand. It shows how a small custom tool can complement an existing platform.

Edge Impulse provides a user-friendly interface that lets anyone, regardless of prior AI experience, create machine learning models for real-world applications. Combined with the custom capture tool, the workflow becomes faster and easier to repeat for new projects.