EcoSort

In today’s world, proper waste management is more important than ever. What if you could sort your trash more easily, quickly, and efficiently? Meet EcoSort, a robot designed to help categorize and sort your waste automatically. Using a MEMENTO camera and AI, EcoSort captures an image of the trash inside a box, identifies its type (Landfill, Compost, Hazard, Plastic, Metal, Paper, Glass, or Organic), and categorizes it accordingly.

Once the trash is classified, EcoSort sends the result over MQTT (through Adafruit IO) to a Pico W board, which triggers the corresponding lights and audio to tell you the trash type. This robot makes sorting waste not only smarter but also more interactive. Follow along to learn how to build your own!

Supplies

x1 Adafruit NeoPixel LED Strip w/ Alligator Clips - 60 LED/m - 0.5 Meter Long - Black Flex

x1 Breadboard

x1 Raspberry Pi Pico W

x1 MEMENTO - Python Programmable DIY Camera - Bare Board

(This guide doesn't use the kit, but if you want to skip some of the 3D printing steps, you may also want to get one Adafruit MEMENTO Camera Enclosure & Hardware Kit.)

x1 JST PH 2mm 3-pin Plug-Plug Cable - 100mm long or x2 JST PH 2mm 3-pin Plug to Color Coded Alligator Clips Cable

x1 Copper Foil Tape with Conductive Adhesive - 6mm x 15 meter roll

x2 Small Alligator Clip to Male Jumper Wire

x3 Premium Male/Male Jumper Wires - 20 x 3"

x1 Lithium Ion Battery - 3.7V 2000mAh

x1 Mini Speaker

x4 M3x20 bolts

x3 M3 nuts

x2 small Velcro strips

x1 USB-C Charging Wire

Lasercuts & 3D Printouts

Lasercut and Assemble Boxes

To start this project, download the files attached below, which include everything you need for assembly.

- Prepare the Wooden Base

- The wooden box serves as the base of the project. Start by assembling this wooden box—this will support the acrylic box that will be attached later.

- Drill a Hole for Wires

- On one side of the wooden box, drill a hole. This will allow you to pass the wires from the light strips through the box, ensuring a clean and organized setup.

- Assemble the Acrylic Box

- The acrylic box will fit inside the wooden box. The gap between the wooden and acrylic boxes is important as it will accommodate the light strips later in the project. Ensure that there is enough space to fit the lights comfortably.

- Insert the Acrylic Box

- Once the hole is drilled, place the acrylic box inside the wooden box and secure it in place with a hot glue gun.

With the base structure ready, you’re now prepared to proceed with the next steps of the project!

3D Printouts

Now it's time to do some 3D printing.

- Download the STL Files

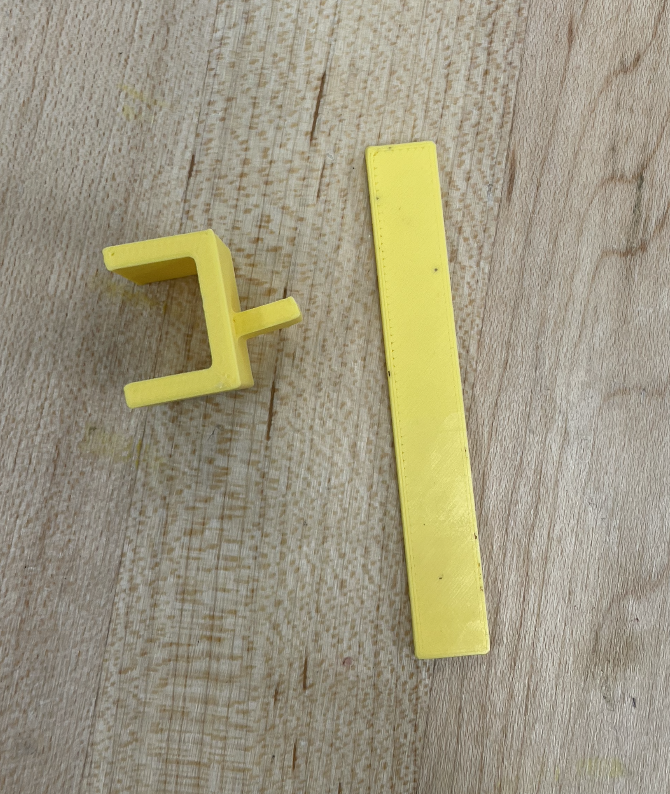

- First, download the STL files attached below for the parts you'll need to print. There are two parts: the yellow camera holder, which holds your MEMENTO camera securely in place, and the black print that fits on the front of the camera.

- Print the Yellow Camera Holder

- Using your 3D printer, print the yellow camera holder. This part will be essential for holding up the camera.

- The holder is 4 mm taller than the camera so that the camera fits easily with the Velcro stickers attached.

- Assemble the Camera Holder

- Once printed, assemble the camera holder by using superglue or a hot glue gun to secure the pieces together. This will create a stable base for the camera.

- Add two small Velcro stickers to the inside of the camera holder.

- Print the Black Printout

- The black print mounts on the front of the MEMENTO camera. It is designed to fit the camera and its holes exactly.

Once your camera holder is assembled and the black printout is attached, you’re ready to proceed with integrating the camera into the robot’s system!

Downloads

Assemble Camera

Now that you’ve printed and assembled the camera holder, it’s time to put together the MEMENTO camera. Follow the steps below to make sure everything is connected and ready:

- Insert the SD Card

- Begin by inserting the SD card into the camera.

- Connect the Ports Next to the SD Card

- To make the SD card work, connect the two ports right next to it using a plug-plug cable. If you don't have a plug-plug cable, you can use two plug-to-alligator cables instead: clip the matching color-coded clips together and wrap them with copper tape for a more secure, stable connection.

- This is the most important step: without a working SD card, the camera has nowhere to store images, so it cannot retrieve or process them.

- Add the Battery

- Insert the battery into the designated slot to power the camera.

- Connect to Your Laptop

- Use a USB-C cable to connect the camera to your laptop. This will allow you to start coding and communicate with the camera for setup.

- Attach the Black 3D Prints

- Use four M3x20 bolts with the M3 nuts to attach the black 3D print to the camera. This keeps the camera properly secured within the holder.

- Attach Velcro to Secure the Camera

- Attach two small velcro strips to the middle bottom of both the front and back of the camera. These will help secure the camera to the camera holder, allowing it to stay in place during operation.

Additional step: mount the camera in the camera holder (the Velcro strips hold it in place)!

With the camera assembled, you're ready to continue integrating it into your robot’s system!

Code the Camera

Copy the code below into your code.py. Here are the most important parts to understand:

- Imports and Setups

- Importing libraries for file I/O, networking, image handling, display output, MQTT communication, and camera control.

- Configuration and Initialization

- text_scale: Scale for the text displayed on the screen.

- trash: A template string to ask OpenAI’s model to classify trash types.

- prompts: A list of classification prompts for OpenAI. In this case, there's only one prompt.

- num_prompts, prompt_index, prompt_labels: Variables to manage the prompt system for cycling through different classification tasks.

- Image Encoding Function

- This function reads an image from a file path and converts it into base64 encoding, which is required for sending images through OpenAI's API.

- Text Display on Screen

- Displays text on the screen by creating a rectangular background and wrapping the text to fit within the display area. It also adjusts the formatting for different prompt types.

- Image Classification via OpenAI API

- Sends the image (encoded in base64) to OpenAI's API with the appropriate prompt. It then processes the returned classification result.

- Replace API-KEY with your own OpenAI API key. If you don't have one, create an OpenAI account and generate a new secret key.

- The returned classification text determines the type of trash, and the matching message is then sent.

- This section saves the text result from OpenAI into a text file, using the same filename as the image but with a .txt extension. The results are appended to the file.

- Sending Classification Results via MQTT

- Based on the classification result (TEXT), this block of code sends the type of trash to the MQTT broker. Each category (e.g., "Organic", "Plastic") has its own message published to the light_sound_feed feed.

- Loading and Displaying Images

- WiFi & MQTT Connection

- Enter your own Wi-Fi SSID and password in wifi.radio.connect() so the camera can get online, reach OpenAI, and process images.

- Configures the MQTT client to connect to Adafruit IO. It subscribes to feeds for interaction with the system.

- light_sound_feed: the feed we're controlling and sending the data over to.

- Main Loop (see the full code for details)

- The main loop handles capturing images, displaying messages, handling button presses (for cycling through prompts), and sending requests to OpenAI. It also manages interactions with MQTT feeds.
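The image-encoding and OpenAI-request steps above can be sketched in plain Python. This is a minimal illustration, not the project's code.py: it assumes the OpenAI Chat Completions vision format (a text part plus an `image_url` part holding a base64 `data:` URL), and the model name `gpt-4o-mini`, the JPEG MIME type, and the helper names `encode_image`, `build_request`, and `result_path` are assumptions. It uses `binascii.b2a_base64`, which CPython provides and CircuitPython also ships, though you should confirm it on your build.

```python
import binascii


def encode_image(path):
    """Read an image file and return its bytes as a base64 string."""
    with open(path, "rb") as f:
        data = f.read()
    # b2a_base64 appends a trailing newline; strip it before embedding the string
    return binascii.b2a_base64(data).decode("ascii").strip()


def build_request(prompt, image_b64, model="gpt-4o-mini"):
    """Build a Chat Completions request body with one text prompt and one image.

    The model name is an assumption; use any vision-capable model your key can access.
    """
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        # Assumes the MEMENTO saves JPEG files
                        "image_url": {"url": "data:image/jpeg;base64," + image_b64},
                    },
                ],
            }
        ],
    }


def result_path(image_path):
    """Hypothetical helper: same filename as the image, but with a .txt extension."""
    dot = image_path.rfind(".")
    base = image_path[:dot] if dot != -1 else image_path
    return base + ".txt"
```

On the camera you would then send the body with an `adafruit_requests` session, for example `requests.post("https://api.openai.com/v1/chat/completions", json=body, headers={"Authorization": "Bearer " + API_KEY})`, where `API_KEY` is your own key.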

The code provides a full flow for interacting with a camera, sending images to OpenAI for classification, displaying results on a screen, and sending messages via MQTT based on those results.
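The MQTT step can be pictured as a small lookup: scan OpenAI's reply for one of the eight category names and publish its lowercase form, which is what the dashboard press values and the receiver expect. This is a hypothetical sketch: the function names and the "earliest category mentioned wins" rule are assumptions, and `client` stands for a connected `adafruit_minimqtt` client, whose `publish(topic, msg)` method is the only call used.

```python
# The eight categories EcoSort recognizes
CATEGORIES = ("Landfill", "Compost", "Hazard", "Plastic", "Metal", "Paper", "Glass", "Organic")


def message_for(text):
    """Return the lowercase category mentioned earliest in the reply, or None if none appears."""
    lowered = text.lower()
    best = None
    best_pos = len(lowered)
    for name in CATEGORIES:
        pos = lowered.find(name.lower())
        if pos != -1 and pos < best_pos:
            best, best_pos = name.lower(), pos
    return best


def publish_result(client, topic, text):
    """Publish the detected category to the feed topic; return the message sent, or None."""
    msg = message_for(text)
    if msg is not None:
        client.publish(topic, msg)
    return msg
```

Picking the earliest mention keeps a reply such as "Paper cup with a plastic lining" mapped to "paper" rather than whichever category happens to come first in the tuple.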

Downloads

Adafruit IO Dashboard

To publish data and retrieve it, you’ll create an Adafruit IO dashboard with momentary buttons. Follow these steps to set it up:

1. Sign in to Adafruit IO

- Go to adafruit.com and log in to your account.

- If you don’t have an account, create one and sign in.

2. Access Your Dashboard

- Navigate to the IO section in the site header.

- Click Dashboards and then open Dashboard Settings (on the right side).

- Select Create New Block to start adding controls.

3. Create the Trash-Type Buttons

- Select Block Type: Choose Momentary Button as the block type.

- Enter Feed Name: Create a new feed called light_sound_feed (the same feed the camera code publishes to and the Pico W listens on).

- Set Button Titles and Values:

- Add buttons with the titles: Organic, Glass, Paper, Landfill, Metal, Plastic, Hazard, Compost

- Set each button's color to match its category. The colors here follow common trash-bin color coding, but you may want to adjust them.

- For each button:

- Set the Button Text and Press Value to match the title (e.g., "organic," "glass").

- Ensure the Press Value is written in lowercase to align with the code.

This is the IO dashboard for reference: https://io.adafruit.com/parkbpx/dashboards/camera-control.
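Because the receiver matches on the exact press value, it can help to sanity-check the dashboard values before testing. The sketch below is illustrative only: `PRESS_VALUES` is copied from the button list above, and `feed_topic` and `check_press_values` are hypothetical helpers. Adafruit IO's MQTT topics follow the pattern `username/feeds/feed_key`; check the key shown on your feed's page, since it can differ from the display name.

```python
# Press values for the eight dashboard buttons, as listed above
PRESS_VALUES = ["organic", "glass", "paper", "landfill", "metal", "plastic", "hazard", "compost"]


def feed_topic(username, feed_key):
    """Adafruit IO MQTT topic for a feed: <username>/feeds/<feed_key>."""
    return f"{username}/feeds/{feed_key}"


def check_press_values(values):
    """Return the values that would not match the code: not lowercase, or duplicated."""
    problems = []
    seen = set()
    for v in values:
        if v != v.lower() or v in seen:
            problems.append(v)
        seen.add(v)
    return problems
```

For example, `feed_topic("your_username", "light_sound_feed")` gives `your_username/feeds/light_sound_feed`, and `check_press_values(PRESS_VALUES)` returns an empty list when every value is lowercase and unique.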

Code the Actions (receiver)

Next, load the code onto the receiver, the Pico W, which is responsible for lighting up the box and playing sounds.

- Configure the settings.toml File

- Edit the file to include your Wi-Fi credentials and Adafruit IO settings. Copy and paste the following template, replacing the placeholders with your actual details:

- Replace PLACE THE NAME OF YOUR WIFI with your Wi-Fi network name (SSID).

- Replace PLACE THE PASSWORD OF YOUR WIFI with your Wi-Fi password.

- Replace PLACE YOUR AIO USERNAME and PLACE YOUR AIO KEY with the credentials from the yellow key icon on the dashboard.

- Code Import and Setups

- Import necessary libraries for hardware control (LEDs, audio), network communication (Wi-Fi, MQTT), and file handling.

- Hardware Initialization

- NeoPixel Strip:

- Controlled via GPIO pin GP15, with 30 LEDs.

- Brightness is initially set to maximum (1.0).

- Audio Output:

- Audio output is set up on GPIO pin GP16.

- Path: Directory for the MP3 files. Download sounds.zip, unzip it, and copy its contents onto your CIRCUITPY drive so the code can play the sounds.

- Play Sound and Lights

- Set the LED strip to the specified color and brightness.

- Open the MP3 file and play it using the audio object.

- Wait until the audio finishes playing before turning off the LED strip.

- MQTT Callback Functions

- Fetches Adafruit IO username and key from environment variables in settings.toml using os.getenv.

- Constructs the MQTT feed name light_sound_feed.

- Connected: Subscribes to the light_sound_feed upon connecting to Adafruit IO.

- Disconnected: Logs a message when disconnected.

- Messages

- Executes actions based on the MQTT message received:

- Each message corresponds to a trash type (e.g., "organic," "glass") and triggers the activate function with that type's color, brightness, and sound file.

- Connection to WiFi & Dashboard

- Connects to a Wi-Fi network using credentials from environment variables.

- Initializes the MQTT client using:

- Broker and port from environment variables.

- Authentication with Adafruit IO username and key.

- Socket pool for network communication.

- Main Loop

- Sends an initial MQTT request to fetch the latest state of the light_sound_feed.

- Continuously listens for MQTT messages in the main loop.
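The receiver's message handling can be pictured as a lookup table from trash type to light color and sound file. The sketch below is hypothetical: the RGB colors, the `.mp3` filenames, and the `/sounds` folder are placeholders rather than the project's actual values, and `activate` is passed in so the logic can be tested without hardware. On the Pico W, `activate` would set the NeoPixel strip on GP15, play the MP3 through GP16, and turn the strip off afterward, as described above. The `(client, topic, message)` signature matches the `on_message` callback used by `adafruit_minimqtt`.

```python
SOUND_DIR = "/sounds"  # assumption: folder copied from sounds.zip

# Hypothetical (R, G, B) color and sound file for each trash type
ACTIONS = {
    "organic": ((0, 255, 0), "organic.mp3"),
    "glass": ((0, 255, 255), "glass.mp3"),
    "paper": ((0, 0, 255), "paper.mp3"),
    "landfill": ((80, 80, 80), "landfill.mp3"),
    "metal": ((255, 255, 255), "metal.mp3"),
    "plastic": ((255, 255, 0), "plastic.mp3"),
    "hazard": ((255, 0, 0), "hazard.mp3"),
    "compost": ((139, 69, 19), "compost.mp3"),
}


def make_message_handler(activate, brightness=1.0):
    """Build an on_message(client, topic, message) callback that calls activate(color, brightness, path)."""

    def on_message(client, topic, message):
        key = message.strip().lower()
        action = ACTIONS.get(key)
        if action is None:
            print("Unknown message on", topic, ":", message)
            return False  # MiniMQTT ignores return values; they only make testing easier
        color, filename = action
        activate(color, brightness, SOUND_DIR + "/" + filename)
        return True

    return on_message
```

With MiniMQTT you would assign the result, e.g. `mqtt_client.on_message = make_message_handler(activate)`, before entering the main loop.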

Downloads

Assemble Everything

Now, the last step: put everything together!

- Squeeze in the light strips

- Carefully insert the light strips into the gap between the wooden and acrylic box.

- Tip: It might be challenging—go slowly to avoid damaging the strips or bending them too sharply.

- Make sure the light strip wires come out through the hole you drilled in the wooden box.

- Wire in

- Light Strips

- Use 3 male-to-male jumper wires for the connections:

- Black wire: Connect to the GND pin on the breadboard.

- Red wire: Connect to the 5V pin.

- White wire: Connect to GP15 on the Pico W.

- Speaker

- Use 2 male-to-alligator wires:

- Bottom speaker wire: Attach to GND on the Pico W.

- Top speaker wire: Attach to GP16.

- Optional: Attach the Camera Holder

- If you have the camera holder and want to secure it, position the holder at the top of the box.

- Use a glue gun to fix it in place.

- If you would rather hold the camera holder by hand, that works too!

Done!